An Artificial Intelligence agent can be classified into five different types, each of which is described below:

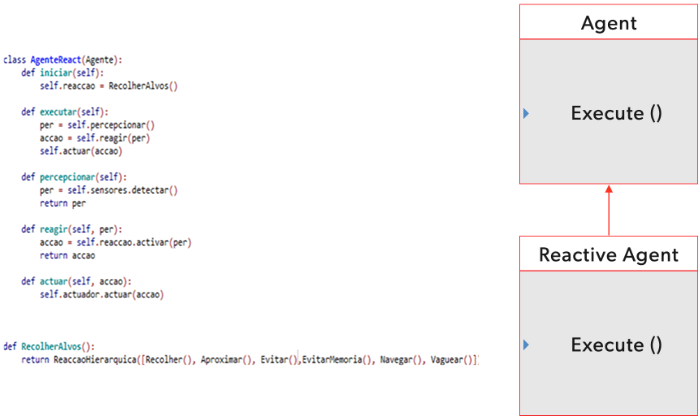

Reactive agents

Reactive agents, or reactive architectures, avoid complex symbolic models and reasoning and make decisions "in real time". Their intelligence comes only from interaction with the environment, without a pre-established model: a very limited set of information and a few simple rules are enough to select a behaviour. The information obtained from the agent's sensors feeds directly into the decision-making process, and no symbolic representation of the world is built. Reactive agents may or may not have memory; those that do can represent temporal dynamics.
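To make the idea concrete, here is a minimal sketch of two reactive agents in a hypothetical two-cell "vacuum world" (the percept format `(location, is_dirty)`, the cell names `"A"`/`"B"` and the action names are all assumptions for illustration). The first agent maps the current percept straight to an action through condition-action rules; the second keeps a short window of past percepts, which is what lets a reactive agent respond to temporal patterns.

```python
from collections import deque
from typing import Deque, Tuple

# Hypothetical percept: (location, is_dirty) in a two-cell world with cells "A" and "B".
Percept = Tuple[str, bool]


def reactive_agent(percept: Percept) -> str:
    """Memoryless reactive agent: the current percept alone selects the action."""
    location, dirty = percept
    # Condition-action rules, checked in order; no world model is built.
    if dirty:
        return "suck"
    if location == "A":
        return "right"
    return "left"


class ReactiveAgentWithMemory:
    """Reactive agent that remembers only the last `window` percepts."""

    def __init__(self, window: int = 3) -> None:
        self.history: Deque[Percept] = deque(maxlen=window)

    def act(self, percept: Percept) -> str:
        self.history.append(percept)
        location, dirty = percept
        if dirty:
            return "suck"
        # Temporal rule: if every remembered percept was clean, stop moving.
        window_full = len(self.history) == self.history.maxlen
        if window_full and not any(d for _, d in self.history):
            return "idle"
        return "right" if location == "A" else "left"
```

For example, `reactive_agent(("A", True))` returns `"suck"`, while a `ReactiveAgentWithMemory(window=3)` that sees three clean percepts in a row switches to `"idle"`, a decision the memoryless agent cannot make.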

Deliberative agents

Deliberative agents, or deliberative architectures, follow the classical approach to artificial intelligence. Here, agents act with little autonomy and hold explicit symbolic models of the environment around them. These architectures broadly treat agents as part of a knowledge-based system: the agent's decisions (which action to take) are made through logical reasoning. The agent has an internal representation of the world and an explicit mental state that is updated as the environment changes.
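A minimal sketch of a deliberative agent, assuming the symbolic model is a set of propositional facts and the reasoning is forward chaining over Horn-clause rules (one possible choice of logic, not the only one). The fact names and the `do:<action>` convention for action facts are hypothetical. Percepts update the agent's beliefs, and the action is chosen by what the rules entail.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule (Horn clause): if every premise is believed, the conclusion is believed too.
Rule = Tuple[FrozenSet[str], str]


class DeliberativeAgent:
    def __init__(self, rules: List[Rule]) -> None:
        self.beliefs: Set[str] = set()  # explicit symbolic model of the world
        self.rules = rules

    def perceive(self, facts: Set[str]) -> None:
        """Update the internal model with new facts (this sketch never retracts beliefs)."""
        self.beliefs |= facts

    def infer(self) -> Set[str]:
        """Forward chaining: apply rules until no new fact can be derived."""
        derived = set(self.beliefs)
        changed = True
        while changed:
            changed = False
            for premises, conclusion in self.rules:
                if premises <= derived and conclusion not in derived:
                    derived.add(conclusion)
                    changed = True
        return derived

    def decide(self) -> str:
        """Return an entailed 'do:<action>' fact (alphabetical order, for determinism)."""
        for fact in sorted(self.infer()):
            if fact.startswith("do:"):
                return fact[len("do:"):]
        return "wait"


rules: List[Rule] = [
    (frozenset({"obstacle_ahead"}), "blocked"),
    (frozenset({"blocked", "path_left"}), "do:turn_left"),
    (frozenset({"path_clear"}), "do:move_forward"),
]
```

With these rules, a fresh `DeliberativeAgent(rules)` decides `"wait"`; after `perceive({"obstacle_ahead", "path_left"})` it first derives `blocked` and then decides `"turn_left"`.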

Search in a state-space

Search in a state space is one of the most widely used techniques for solving AI problems. The idea is to have an agent capable of performing actions that change the current state. A problem is thus defined by an initial state (for example, the agent's starting position), a set of actions the agent can perform and an objective (the final state). Solving the problem means finding a sequence of actions that takes the agent from the initial state to the final (objective) state.

When searching a state space, we must first define the problem. This means specifying the actions the agent can take, the initial state, the final state (objective) and, depending on the problem, the set of possible states.
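The problem definition can be written down directly as data. The sketch below assumes a finite, explicitly listed transition table; the class name `SearchProblem`, the helper `is_solution` and the room names are hypothetical. It captures the initial state, the goal, the available actions and their effects, plus a check that a proposed action sequence really leads from the initial state to the goal.

```python
from dataclasses import dataclass
from typing import Dict, Hashable, List, Sequence

State = Hashable
Action = str


@dataclass
class SearchProblem:
    initial: State
    goal: State
    # transitions[state][action] = state reached by performing `action` in `state`.
    transitions: Dict[State, Dict[Action, State]]

    def actions(self, state: State) -> List[Action]:
        """Actions available in `state` (empty if none are listed)."""
        return list(self.transitions.get(state, {}))

    def result(self, state: State, action: Action) -> State:
        return self.transitions[state][action]

    def is_goal(self, state: State) -> bool:
        return state == self.goal


def is_solution(problem: SearchProblem, plan: Sequence[Action]) -> bool:
    """Apply `plan` from the initial state and check that it ends in the goal."""
    state = problem.initial
    for action in plan:
        if action not in problem.actions(state):
            return False  # action not applicable in the current state
        state = problem.result(state, action)
    return problem.is_goal(state)
```

For instance, with `SearchProblem("A", "C", {"A": {"go_B": "B"}, "B": {"go_A": "A", "go_C": "C"}})`, the plan `["go_B", "go_C"]` is a solution, while `["go_B"]` stops short of the goal.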

With the problem defined, we look for the best solution, that is, the best path from the initial state to the final state. Different search algorithms can find it: each receives a problem as input and returns a solution in the form of a sequence of actions. A solution is therefore the sequence of actions, found by a search mechanism, that leads to the objective being satisfied.
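Breadth-first search is one simple algorithm of this kind: it takes a problem (here an initial state, a successor function and a goal test) and returns a sequence of actions. The sketch below assumes every action has the same cost, in which case the first plan found is also a shortest one. The toy problem in the usage note, with integer states and actions `"+1"` and `"*2"`, is hypothetical.

```python
from collections import deque
from typing import Callable, Dict, Hashable, Iterable, List, Optional, Tuple

State = Hashable


def breadth_first_search(
    initial: State,
    successors: Callable[[State], Iterable[Tuple[str, State]]],
    is_goal: Callable[[State], bool],
) -> Optional[List[str]]:
    """Return a shortest action sequence from `initial` to a goal state, or None."""
    frontier = deque([initial])
    # parent[s] = (previous state, action taken); doubles as the visited set.
    parent: Dict[State, Optional[Tuple[State, str]]] = {initial: None}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            # Walk back through the recorded parents to rebuild the plan.
            plan: List[str] = []
            while parent[state] is not None:
                prev, action = parent[state]
                plan.append(action)
                state = prev
            return plan[::-1]
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                frontier.append(nxt)
    return None  # goal unreachable from the initial state
```

For example, `breadth_first_search(1, lambda s: [("+1", s + 1), ("*2", s * 2)], lambda s: s == 6)` returns `["+1", "+1", "*2"]` (1 → 2 → 3 → 6). Other algorithms, such as uniform-cost or A* search, keep the same interface but order the frontier differently.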

Markov decision processes

A Markov decision process (MDP) is a way of modelling processes in which transitions between states are probabilistic. The current state of the process can be observed, and the process can be influenced periodically by performing actions. Each action yields a reward (or cost) that depends on the state the process is in when the action is taken.
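These ingredients map naturally onto two tables: transition probabilities indexed by (state, action) and rewards indexed by (state, action). The sketch below uses a hypothetical two-state machine-maintenance example; the state names, action names and all numbers are illustrative assumptions. `step` samples the next state from the transition distribution and returns it with the reward.

```python
import random
from typing import Dict, Tuple

State = str
Action = str

# Hypothetical example; probabilities and rewards are illustrative only.
# P[(s, a)] = {next_state: probability}
P: Dict[Tuple[State, Action], Dict[State, float]] = {
    ("working", "operate"): {"working": 0.8, "broken": 0.2},
    ("working", "repair"): {"working": 1.0},
    ("broken", "operate"): {"broken": 1.0},
    ("broken", "repair"): {"working": 0.9, "broken": 0.1},
}
# R[(s, a)] = immediate reward; negative values act as costs.
R: Dict[Tuple[State, Action], float] = {
    ("working", "operate"): 10.0,
    ("working", "repair"): -2.0,
    ("broken", "operate"): 0.0,
    ("broken", "repair"): -5.0,
}


def step(state: State, action: Action, rng: random.Random) -> Tuple[State, float]:
    """Sample the next state and return it together with the reward for (state, action)."""
    outcomes = P[(state, action)]
    next_state = rng.choices(list(outcomes), weights=list(outcomes.values()))[0]
    return next_state, R[(state, action)]
```

Each row of `P` must sum to 1. Calling `step("broken", "repair", random.Random(42))` returns `"working"` or `"broken"` with probabilities 0.9 and 0.1, together with a reward of -5.0.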

Processes that satisfy the Markov property share a defining characteristic: the effect of an action depends only on that action and the current state of the system, not on how the process reached that state. They are called "decision" processes because they model an agent that intervenes in the system by carrying out actions.
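The Markov property is what makes the process easy to roll forward: the distribution over next states is computed from the current state distribution and the chosen actions alone, with no history kept. The sketch below assumes the agent follows a fixed policy (a hypothetical mapping from state to action) and reuses an illustrative two-state example; changing the policy changes how the process evolves, which is the agent's decision-making influence.

```python
from typing import Dict, Tuple

State = str
Action = str
Transitions = Dict[Tuple[State, Action], Dict[State, float]]

# Hypothetical two-state process; all numbers are illustrative only.
P: Transitions = {
    ("working", "operate"): {"working": 0.8, "broken": 0.2},
    ("working", "repair"): {"working": 1.0},
    ("broken", "operate"): {"broken": 1.0},
    ("broken", "repair"): {"working": 0.9, "broken": 0.1},
}

# Hypothetical policy: the action the agent performs in each state.
policy: Dict[State, Action] = {"working": "operate", "broken": "repair"}


def propagate(
    dist: Dict[State, float], transitions: Transitions, policy: Dict[State, Action]
) -> Dict[State, float]:
    """One time step: the new distribution depends only on the current distribution
    and the actions chosen in each state, not on earlier history (Markov property)."""
    new: Dict[State, float] = {}
    for s, p_s in dist.items():
        for s_next, p in transitions[(s, policy[s])].items():
            new[s_next] = new.get(s_next, 0.0) + p_s * p
    return new
```

Starting from `{"working": 1.0}`, two steps under `policy` give about 0.82 for `"working"`, whereas a policy that always chooses `"operate"` leaves only about 0.64, showing how the agent's choice of actions shapes the process.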

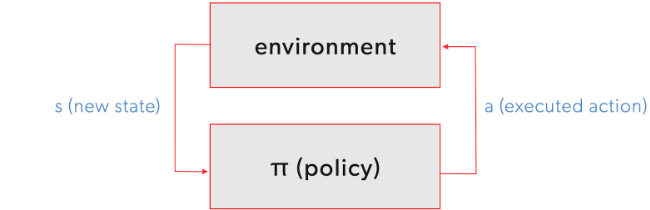

The figure below shows the functioning dynamics of a system modelled as a Markov decision process.

Figure 1 - Modelling a system with a Markov decision process